About Me

I am currently focusing on the intersection of Signal Processing and Machine Learning. I design hybrid models and bioinspired regularizations (based on Sparse Coding (DLSC), information theory, eigenvalue theorems, Frame Theory, Zipf's law, probability, ...) to regularize Deep Neural Networks (Transformers, diffusion models, LSTMs, ConvNets, VAEs, ...) so that they learn filters, representations, and discriminations better suited to the data and labels at hand.


Starting in the third year of my B.Sc., I took basic graduate-level CS courses (Computer Vision, Machine Learning, Statistical Estimation, fuzzy methods, ...) as electives and earned A grades in them. So far, I have experience not only in developing new special-purpose methods in metric learning (modified dictionary learning for cognitive time-series analysis, a new ICA applied to Word2Vec embeddings, ...), but also in general-purpose methods (heuristic initialization of gradient descent applied to BCI classification, parameter fine-tuning, ...).

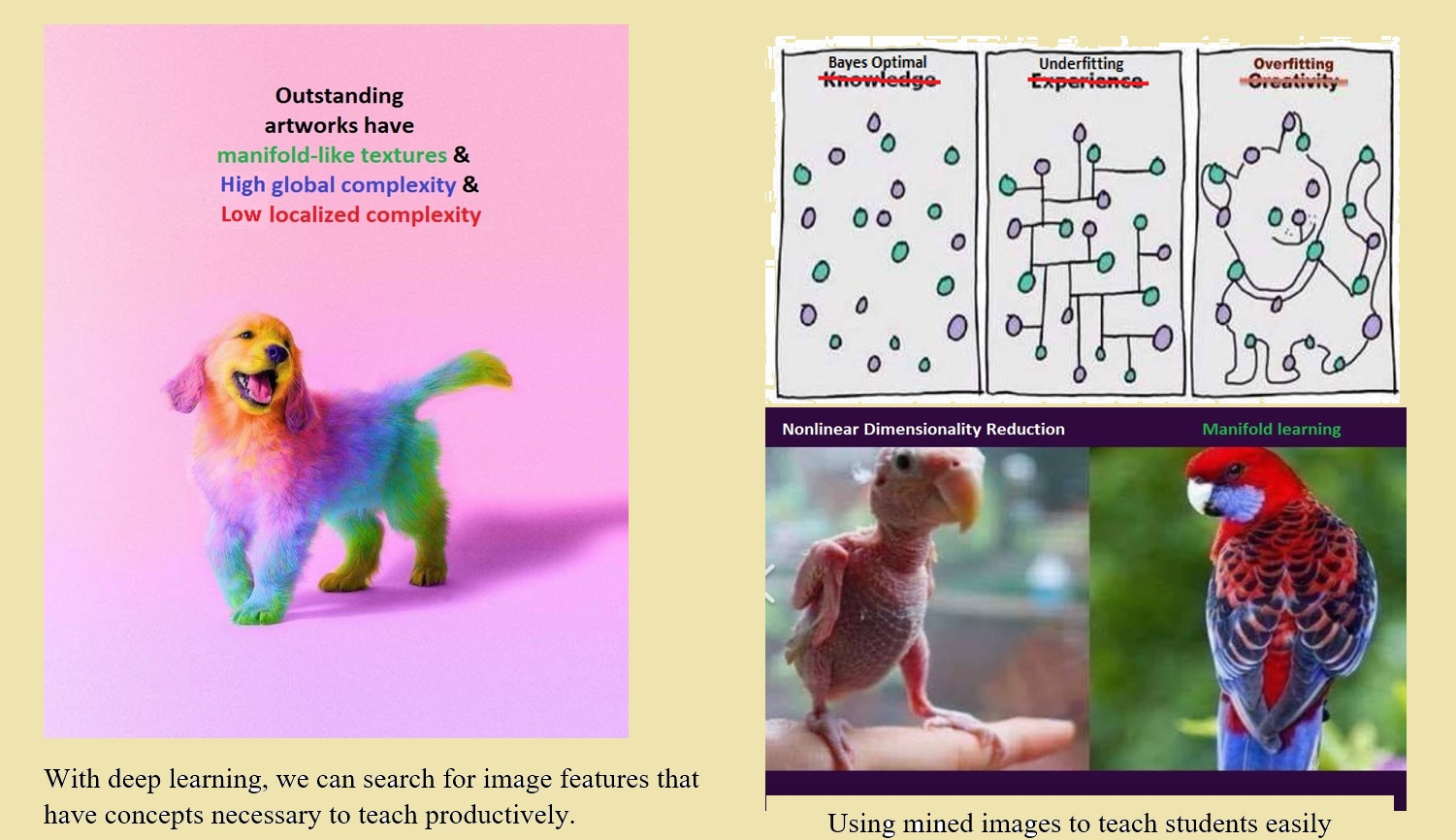

Currently, I am improving the accuracy of NLP models (especially Word2Vec and knowledge graph embeddings), BCI systems (especially cognitive time series in language applications), and image/text networks by equipping deep learning models with manifold preservers, constraint regularizers, and metric learning approaches.

Career updates:

- 2022: Head of the Medical Data Analytics B.Sc./M.Sc. group at Queen's University

- 2021-2022: Internship -- Pfizer Canada, Project: “Prevalence of Moderate-to-Severe Osteoarthritis Pain”

- 2022: Internship -- Medlior Health Outcomes Ltd, Project: “Diagnosis of Early Alzheimer’s Disease”

- 2020-2022: Graduate Research Assistant at BAMlab, Queen's Computing, Kingston, ON, Canada

Deep Learning projects I conducted in the last year:

- Classification of objects using a modified InceptionNet cascaded with reliability metrics

To push the precision of classifying objects in low-quality images above 90%, I designed a metric learning method based on the entropy and variance of the CNN outputs that prunes uncertain decisions.
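The pruning idea above can be illustrated with a small reject-option sketch. It assumes the CNN outputs softmax probabilities, measures uncertainty as the Shannon entropy of each prediction and, optionally, as the variance of the predicted-class probability over several stochastic forward passes (for example test-time augmentation; the source does not say how the variance is obtained). The function names and the thresholds `max_entropy` and `max_var` are hypothetical and would need tuning on validation data; this is not the original modified-InceptionNet pipeline.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def reliability_mask(probs, mc_probs=None, max_entropy=0.5, max_var=0.05):
    """Return a boolean mask: True = keep the prediction, False = prune it.

    probs:    (N, C) class probabilities from the classifier.
    mc_probs: optional (T, N, C) probabilities from T stochastic passes
              (hypothetical source of the variance term).
    """
    eps = 1e-12
    # Shannon entropy (in nats) of each predictive distribution
    entropy = -(probs * np.log(probs + eps)).sum(axis=1)
    keep = entropy <= max_entropy
    if mc_probs is not None:
        pred = probs.argmax(axis=1)
        # Variance, across passes, of the probability given to the predicted class
        var = mc_probs[:, np.arange(probs.shape[0]), pred].var(axis=0)
        keep &= var <= max_var
    return keep


# A confident prediction is kept; a uniform (maximally uncertain) one is pruned.
probs = softmax(np.array([[4.0, 0.0, 0.0], [1.0, 1.0, 1.0]]))
print(reliability_mask(probs))  # -> [ True False]
```

Only the kept predictions count toward the reported precision, so raising the thresholds trades coverage for precision.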

- Hybridizing Faster R-CNN with a modified cascade classifier for object localization and classification

Faster R-CNN, with its distributed representation, generalizes well. To improve the model's ability to attend to details, an entropy-based Haar cascade trainer is combined with Faster R-CNN.

- Embedding higher orders of entity adjacencies with a new deep Maximum Dependency Minimum Redundancy framework

In this project, I sought a shared representation across different orders of Knowledge Graph adjacency using a new Maximum Dependency Minimum Redundancy (MDMR) framework. The interrelations between knowledge graph entities contain many redundancies at each adjacency order, and these should be removed. Deep Independent Component Analysis is a powerful bioinspired model for reducing redundancy in data and finding the minimal number of principal latent dimensions. I used Bengio's NICE (Non-linear Independent Components Estimation) network for the Minimum Redundancy part of MDMR. For maximum dependency across adjacency orders, I used maximum entropy and cross-correlation functions. The resulting embeddings substantially improved Knowledge Graph Completion on Freebase.
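NICE, which the paragraph above uses for the Minimum Redundancy part, maps data through invertible additive coupling layers to latent variables under a factorized prior, so maximizing likelihood pushes the latent dimensions toward independence, in the spirit of ICA. Below is a minimal NumPy sketch of that forward/inverse structure and the log-likelihood, with untrained random coupling MLPs. The class names, layer count, and hidden size are hypothetical, and the MDMR-specific dependency terms and the training loop are not shown.

```python
import numpy as np


class AdditiveCoupling:
    """NICE-style additive coupling: y_a = x_a, y_b = x_b + m(x_a).

    The Jacobian is triangular with unit diagonal, so log|det J| = 0,
    and the inverse is exact: x_b = y_b - m(y_a).
    """

    def __init__(self, dim: int, hidden: int, swap: bool, rng: np.random.Generator):
        assert dim % 2 == 0, "this sketch assumes an even dimension"
        self.h = dim // 2
        self.swap = swap  # alternate which half is transformed
        # Coupling function m: a one-hidden-layer ReLU MLP (random, untrained here)
        self.W1 = rng.normal(scale=0.5, size=(self.h, hidden))
        self.W2 = rng.normal(scale=0.5, size=(hidden, self.h))

    def _m(self, a):
        return np.maximum(a @ self.W1, 0.0) @ self.W2

    def _split(self, x):
        a, b = x[:, :self.h], x[:, self.h:]
        return (b, a) if self.swap else (a, b)  # a is the unchanged half

    def _merge(self, a, b):
        return np.concatenate([b, a] if self.swap else [a, b], axis=1)

    def forward(self, x):
        a, b = self._split(x)
        return self._merge(a, b + self._m(a))

    def inverse(self, y):
        a, b = self._split(y)
        return self._merge(a, b - self._m(a))


class NICE:
    """Stack of coupling layers plus a diagonal scaling layer."""

    def __init__(self, dim, n_layers=4, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.layers = [AdditiveCoupling(dim, hidden, i % 2 == 1, rng) for i in range(n_layers)]
        self.log_s = np.zeros(dim)  # log of the diagonal scaling

    def encode(self, x):
        for layer in self.layers:
            x = layer.forward(x)
        return x * np.exp(self.log_s)

    def decode(self, z):
        x = z * np.exp(-self.log_s)
        for layer in reversed(self.layers):
            x = layer.inverse(x)
        return x

    def log_likelihood(self, x):
        """Change of variables with a factorized standard logistic prior."""
        z = self.encode(x)
        log_prior = -(np.logaddexp(0.0, z) + np.logaddexp(0.0, -z)).sum(axis=1)
        return log_prior + self.log_s.sum()  # coupling layers add 0 to log|det J|


# Hypothetical usage on random 8-dimensional inputs
x = np.random.default_rng(1).normal(size=(5, 8))
model = NICE(dim=8)
print(np.allclose(model.decode(model.encode(x)), x))  # -> True
print(model.log_likelihood(x).shape)                  # -> (5,)
```

Because the prior factorizes over latent dimensions, a trained model is rewarded for latents that are statistically independent, which is the redundancy-reduction property the MDMR framework relies on.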

Thanks to my heuristic instinct, I can devise holistic approaches to designing hybrid learning-based/rule-based models for both generation and identification.

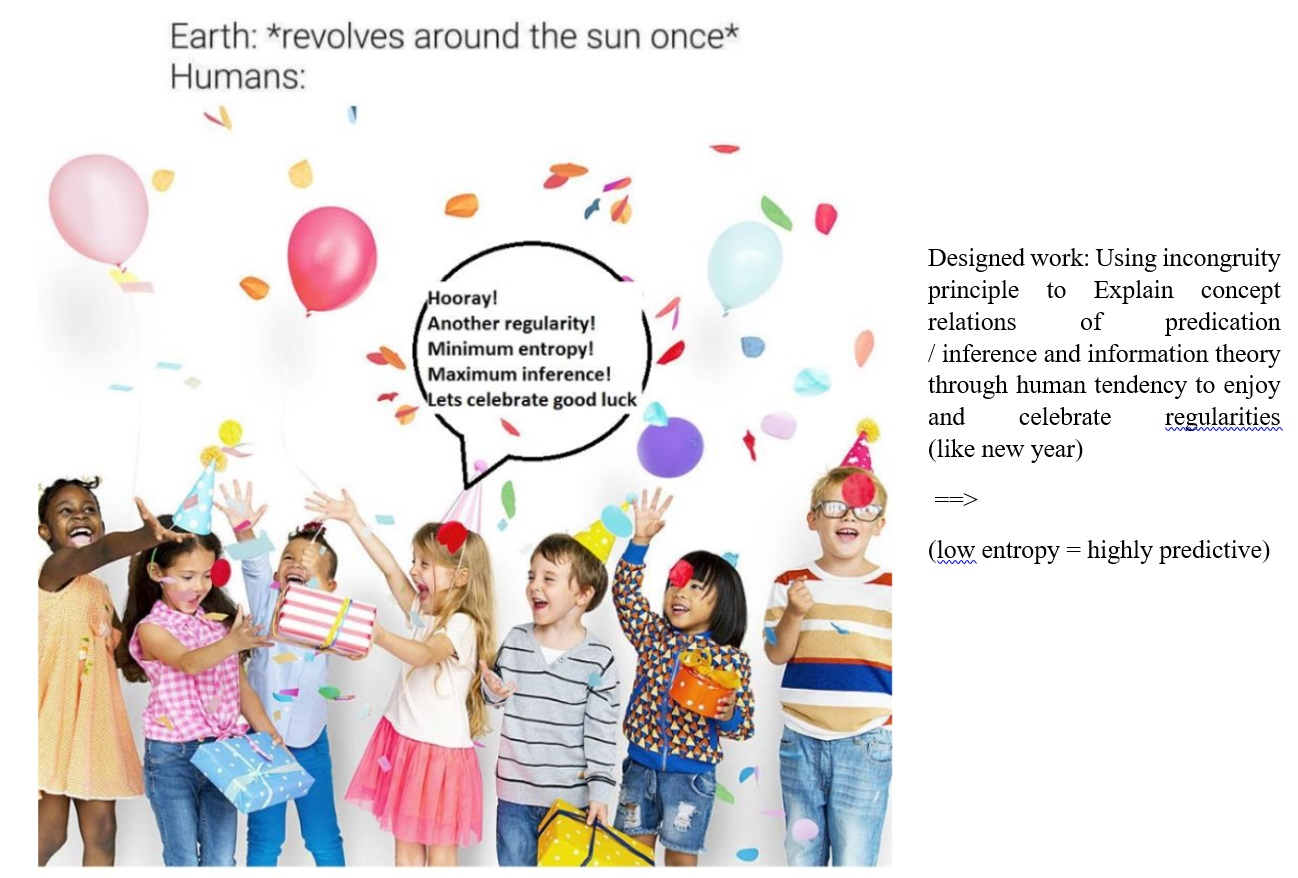

Thanks to my multidisciplinary instinct, I design inductive biases suited to the application at hand, drawing on my knowledge of cognitive neuroscience (e.g., Zipf's law, the principle of least effort, ...) and communication theory (Shannon's optimal bounds).

I am passionate about developing new objective-function terms and context-dependent regularizations that complement existing models for contextual search, question answering, and recommender systems through multi-task learning and cross-language IR. In my past projects, I became skilled at adding modified constraints and regularizations such as unsupervised manifold preservers, scale adaptivity, hierarchy, and modularity.

My CS graduate dissertation poster at a glance.

I have also developed the following methods for interpreting multimodal affective data (containing image, text, and audio), which have received little attention in the literature:

- Info-theoretical, unsupervised-manifold, and modified bi-query Multimodal Transformer.

- Deep Canonical Correlation Analysis for domain adaptation

- Learning a universal multimodal neural language model by tokenizing clustered audio and visual representations in the latent space. (Motivation: the brain processes all modalities as tokens, and time eventually reshapes all modalities.)
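One way to realise the tokenization idea in the last item is to quantize each modality's continuous latent vectors with a clustering codebook and shift the resulting cluster ids past the text vocabulary, so that audio, visual, and text tokens share one id space. The sketch below assumes plain k-means (the source does not name the clustering method), random stand-ins for encoder outputs, and hypothetical vocabulary sizes `TEXT_VOCAB`, `K_AUDIO`, and `K_VISUAL`.

```python
import numpy as np


def tokenize(x: np.ndarray, centers: np.ndarray, offset: int = 0) -> np.ndarray:
    """Map each latent vector to the id of its nearest centre, shifted by offset."""
    d = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1) + offset


def kmeans(x: np.ndarray, k: int, iters: int = 20, seed: int = 0) -> np.ndarray:
    """Plain k-means with farthest-point initialisation; returns (k, d) centres."""
    rng = np.random.default_rng(seed)
    centers = [x[rng.integers(len(x))]]
    for _ in range(1, k):
        # Squared distance from every point to its nearest already-chosen centre
        d = np.min([((x - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(x[d.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(iters):
        labels = tokenize(x, centers)
        for j in range(k):
            members = x[labels == j]
            if len(members):  # keep the old centre if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers


# Hypothetical shared vocabulary: text ids first, then audio ids, then visual ids
TEXT_VOCAB, K_AUDIO, K_VISUAL = 1000, 64, 64
rng = np.random.default_rng(0)
audio_latents = rng.normal(size=(500, 16))   # stand-ins for audio encoder outputs
visual_latents = rng.normal(size=(300, 32))  # stand-ins for visual encoder outputs

audio_ids = tokenize(audio_latents, kmeans(audio_latents, K_AUDIO), offset=TEXT_VOCAB)
visual_ids = tokenize(visual_latents, kmeans(visual_latents, K_VISUAL),
                      offset=TEXT_VOCAB + K_AUDIO)
```

Since a language model only sees integer ids, these audio and visual ids can be interleaved with text token ids in one sequence.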

B.Sc. and M.Sc. Projects:

My Electrical Engineering (Shiraz University) B.Sc. project was "Full Simulation and Implementation of Persian text to speech", supervised by Prof. R. Sameni. For the poster (at a glance), click here.

My Bioelectrical Engineering (Tehran Polytechnic Uni.) M.Sc. project was "Improvement of classification of speech imagery in BCI using dictionary learning methods", supervised by Prof. MH. Moradi. For the thesis poster (at a glance), click here.

More information on my M.Sc. thesis:

- Regularization learning for supervised, data-driven dictionary learning on EEG (extracting a useful feature subspace, manifold preservers (compressive, denoising and BSS, and SSS aspects), tensor decomposition, and distance metric parameter learning (information-theoretic aspect))

Papers (click on each to download):

- Independent component analysis approach using higher orders of Non-Gaussianity (Published) (Click for Presentation)

- Bilinear biased variation of ProjE improves Knowledge Graph Completion in linear and bilinear models (Published)

- Controller parameter tuning by a new metaheuristic (Published)

- Conflict Monitoring Optimization, Heuristic Inspired by Brain Fear and Conflict Systems (Published in Persian, Submitted)

- Localized control of memory usage by Observer Effect Optimization: Application to real-world learning/tuning applications with highly nonlinear function space (Submitted)

- Modified swarm-based metaheuristics enhance Gradient Descent initialization performance: Application for EEG spatial filtering (Submitted)

- Uncertainty Principle based optimization; a new metaheuristics framework (Needs paraphrasing)

- Genetic-TLBO Algorithm to Solve Non-Linear Complex Problems for Life Quality Improvement (Published)

- Precise classification of low quality G-banded Chromosome Images by reliability metrics and data pruning classifier (Submitted)

- New constrained word2vec dictionary pairs learning (Constrained GloVe using Locally Linear Embedding for capturing neighborhood info) (Is being processed)

- Controlling bi-linearity degree of entities and relations in knowledge-graph embedding by modifying ProjE. (Is being processed.)

- Knowledge graph embedding by maximum dependency minimum redundancy over long-term and short-term relationships. (Is being processed.)

Experience and favorable results on:

- Constructive review of the paper “Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank” by Socher et al. The main paper is at the link.

- Ergonomic Text Translator and a bilinear variation of a SOTA Knowledge Graph Completion method. For their visual instructions, source code, and other machine learning methods, please see this PDF link.

- Time series feature learning

- Samples of instructive phrases for lecture instruction, obtained with semi-automated multimodal analysis and concept mining

- Word-reordering feature design for ID3 and CART decision trees, used to create the machine translation GUI software shown below.

- ID3 on Persian speech phonemes (in the B.Sc. thesis, a dataset of phonemes was first derived, and the phonemes were then classified by an ID3-based part-of-speech tagger).

- EEG feature wrapper (implemented in the application part of the second paper in the Papers section).

- Face age progression using an active appearance model with a regularized support vector regression subspace (an example of faces progressed from young to old is shown in the following picture).

Insights, new ideas, and solid knowledge on:

- Regularizations that preserve representative information in all layers of deep supervised neural net.

- Metric learning/manifold preservation in deep/recurrent neural language models

- word2vec embedding using dictionary learning, ICA and manifold preservers: ADMM or SVD form

- Knowledge graph embedding + combination with exact dynamic programs + word2vec combinations

- Piece-wise linear regularization on unsupervised models + deep structure designers

My ongoing projects:

- Multimodal Fusion of texts and images:

--- Coupled Auto-encoder for shared latent space embedding of images and texts mined from Amazon website

--- Deep Joint Dictionary Learning with LLE constraint and layer-wise Canonical Correlation Analysis metric

--- Multimodal Knowledge Graph Embedding with LSTM and word-image-pattern adjacency as a statistical attention mechanism

- I am developing knowledge graph embeddings and word2vec with a neural language model that learns how to attend to an ensemble of deep networks, each of which models a different scale of the data.

- I am working on ensembles of language models that are coupled together to reach the desired accuracy at the cost of extra complexity. This is done by adapting hierarchical clusters or decision trees, each of which is more accurate at its own locality scale.

- Finally, I am developing a graph of adaptive noisy channel models (e.g., n-grams) that are tuned with information gain and Independent Component Analysis to raise the accuracy of SMT systems. I am also training a deep neural network to learn how to traverse the graph.
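The noisy channel model mentioned in the last item translates a source sentence f by choosing the target sentence e that maximises P(f | e) P(e), where P(e) can be an n-gram language model. The toy sketch below rests on loud assumptions: a hand-written word translation table, a monotone word-by-word channel (no reordering), and an add-one-smoothed bigram model. The adaptive graph, the information-gain and ICA tuning, and the neural graph traversal are not shown, and all names and data are hypothetical.

```python
import math
from collections import Counter
from itertools import product


def train_bigram_lm(sentences):
    """Return a function computing log P(e) under an add-one-smoothed bigram model."""
    history, bigrams = Counter(), Counter()
    for s in sentences:
        toks = ["<s>"] + s.split() + ["</s>"]
        history.update(toks[:-1])  # counts of each word used as a history
        bigrams.update(zip(toks[:-1], toks[1:]))
    vocab_size = len({w for s in sentences for w in s.split()}) + 1  # + "</s>"

    def logprob(sentence):
        toks = ["<s>"] + sentence.split() + ["</s>"]
        return sum(
            math.log((bigrams[(a, b)] + 1) / (history[a] + vocab_size))
            for a, b in zip(toks[:-1], toks[1:])
        )

    return logprob


def noisy_channel_decode(src, t_table, lm_logprob):
    """Pick e maximising log P(f | e) + log P(e) over word-by-word candidates.

    t_table[f_word] maps candidate target words e_word to P(f_word | e_word).
    The channel is monotone (no reordering), a simplifying assumption.
    """
    best, best_score = None, -math.inf
    for choice in product(*(t_table[w].items() for w in src.split())):
        e = " ".join(e_word for e_word, _ in choice)
        score = sum(math.log(p) for _, p in choice) + lm_logprob(e)
        if score > best_score:
            best, best_score = e, score
    return best


# Toy, hypothetical data
lm = train_bigram_lm(["the house is big", "the house is old", "it is home"])
t_table = {"la": {"the": 0.7, "it": 0.3}, "casa": {"house": 0.5, "home": 0.5}}
print(noisy_channel_decode("la casa", t_table, lm))  # -> the house
```

Here the translation table alone cannot choose between "house" and "home"; the bigram model breaks the tie, which is the division of labour the noisy channel formulation provides.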

Programming skills:

Python: 9 years

C++: 6 years

Matlab: 9 years

My Full CV:

Mojtaba Moattari CV Github


For collaboration or further questions, feel free to email me.

My Google Scholar:

https://scholar.google.com/citations?user=nJtvH-EAAAAJ

My Email:

moatary@aut.ac.ir

My LinkedIn:

https://www.linkedin.com/in/mojtaba-moattari-4a58b079/

My ResearchGate:

https://www.researchgate.net/profile/Mojtaba_Moattari

My GitHub page (mostly tools I designed for improving visualization, parameter learning, and debugging in Python):

https://www.github.com/moatary
